Risk Profiling for Social Welfare Reexamination

Algoprudence number

AA:2023:02

Key takeaways normative advice commission

- Algorithmic profiling is possible under strict conditions

The use of algorithmic profiling to re-examine whether social welfare benefits have been duly granted is acceptable if applied responsibly.

- Profiling must not equate to suspicion

Re-examination needs to be based more on service and less on distrust.

- Diversity in selection methods

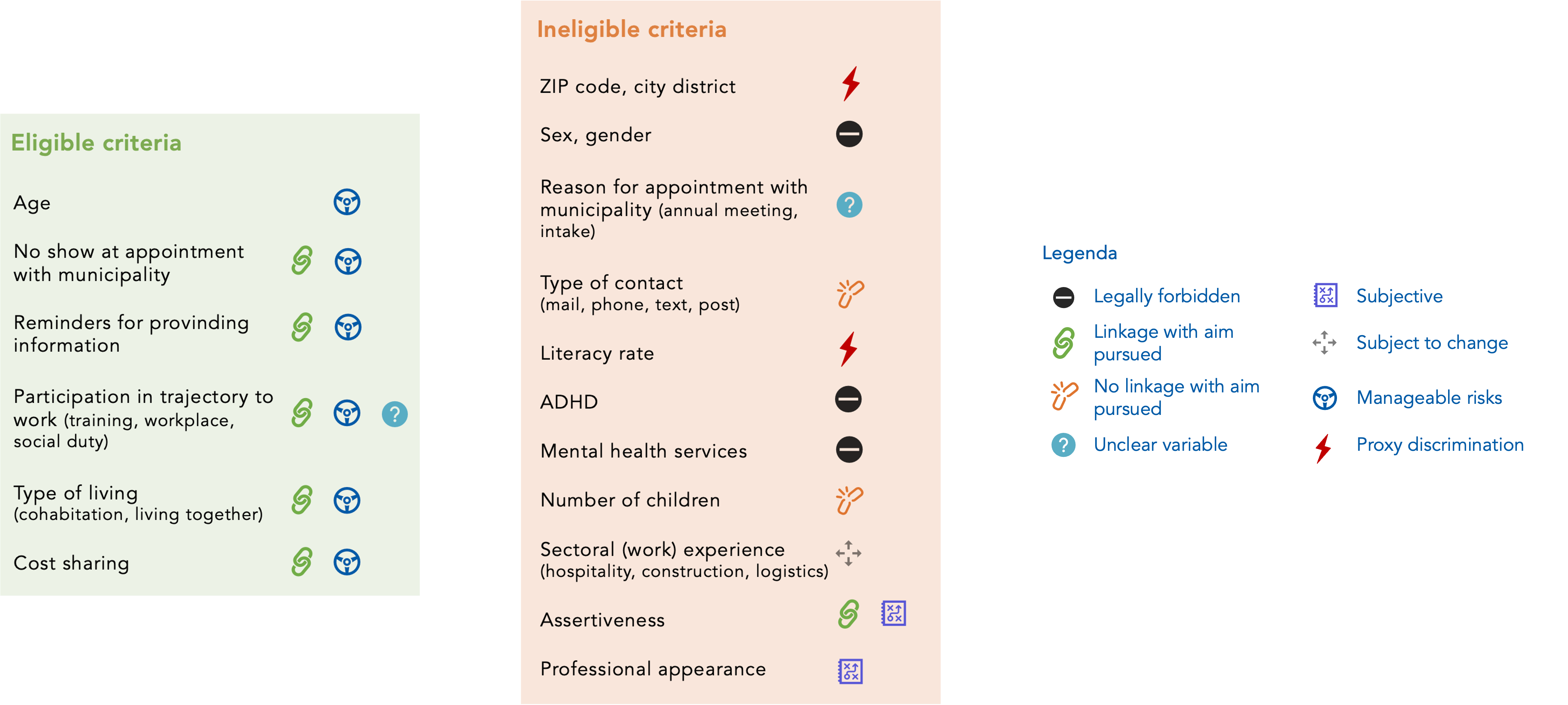

To avoid tunnel vision and negative feedback loops, algorithmic profiling ought to be combined with expert-driven profiling and random sampling.

- Well-considered use of profiling criteria

To avoid (proxy) discrimination and other undesirable forms of differentiation, the normative advice commission assessed each variable individually on its eligibility for profiling (see Infographic).

- Explainability requirements for machine learning

The sampling of residents must be explainable throughout the entire decision-making process. Training methods for variable selection such as the XGBoost algorithm discussed in this case study are considered too complex to meet explainability requirements.

Infographic

Summary advice

The commission judges that algorithmic risk profiling can be used under strict conditions for sampling residents receiving social welfare for re-examination. The aim of re-examination is a leading factor in judging profiling criteria. If re-examination were based less on distrust and adopted a more service-oriented approach, the advice commission would judge a broader use of profiling variables permissible, to enable more precise targeting of individuals in need of assistance. For various variables used by the Municipality of Rotterdam during the period 2017-2021, the commission gives a reasoned judgement on why these variables are or are not eligible as a profiling selection criterion (see Infographic). A combined use of several sampling methods (including expert-driven profiling and random sampling) is recommended to avoid tunnel vision and negative feedback loops. The commission advises stricter conditions for the selection of variables for use by algorithms than for selection by domain experts. The commission states that algorithms used to sample citizens for re-examination must be explainable. Complex training methods, such as the XGBoost model used by the Municipality of Rotterdam, do not meet this explainability criterion. This advice is directed towards all Dutch and European municipalities that use or consider using profiling methods in the context of social services.
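The recommended combination of sampling methods can be illustrated with a minimal Python sketch. It is not the procedure used by any municipality: the `Resident` fields, the function name `select_for_reexamination` and the three quota parameters are hypothetical names introduced here for illustration, and the risk score is assumed to come from a model that meets the explainability requirement.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Resident:
    resident_id: str
    risk_score: float   # hypothetical output of an explainable model
    expert_flag: bool   # hypothetical flag set by a domain-expert rule


def select_for_reexamination(residents, n_algorithmic, n_expert, n_random, seed=0):
    """Return {resident_id: selection_method} drawn from three channels."""
    rng = random.Random(seed)
    selected = {}

    # Channel 1: residents with the highest algorithmic risk scores.
    by_score = sorted(residents, key=lambda r: r.risk_score, reverse=True)
    for r in by_score[:n_algorithmic]:
        selected[r.resident_id] = "algorithmic"

    # Channel 2: expert-driven profiling, skipping residents already chosen.
    expert_pool = [
        r for r in residents
        if r.expert_flag and r.resident_id not in selected
    ]
    for r in rng.sample(expert_pool, min(n_expert, len(expert_pool))):
        selected[r.resident_id] = "expert"

    # Channel 3: uniform random sample from everyone not yet chosen.
    remaining = [r for r in residents if r.resident_id not in selected]
    for r in rng.sample(remaining, min(n_random, len(remaining))):
        selected[r.resident_id] = "random"

    return selected
```

Recording the selection method per resident keeps each selection traceable, and the random channel provides a baseline against which the outcomes of the other two channels can be compared.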

Source of case

Unsolicited research, built upon freedom of information requests submitted by investigative journalists.

Presentation

The advice report (AA:2023:02:A) was presented to the Dutch Minister of Digitalization on November 29, 2023. A press release can be found here.

Report

Download the full report and problem statement here.

Normative advice commission

- Abderrahman El Aazani, Researcher at the Ombudsman Rotterdam-Rijnmond

- Francien Dechesne, Associate Professor Law and Digital Technologies, Leiden University

- Maarten van Asten, Alderman Finance, Digitalization, Sports and Events Municipality of Tilburg

- Munish Ramlal, Ombudsman Metropole region Amsterdam

- Oskar Gstrein, Assistant Professor Governance and Innovation, University of Groningen

Questions raised in the city council of Amsterdam as a result of advice report

Description

Council members submitted questions on whether the machine learning (ML)-driven risk profiling algorithm currently tested by the City of Amsterdam satisfies the requirements set out in AA:2023:02:A, including:

- (in)eligible selection criteria fed to the ML model

- explainability requirements for the explainable boosting algorithm used

- implications of the AI Act for this particular form of risk profiling.

Binnenlands Bestuur

Description

Binnenlands Bestuur, a news website for Dutch public sector administration, reported on AA:2023:02:A. See link.

Reaction Netherlands Human Rights Institute on age discrimination

Age Discrimination

Policies, such as those implemented by public sector agencies investigating (un)duly granted social welfare or by employers seeking new employees, can intentionally or unintentionally lead to differentiation between certain groups of people. If an organization makes this distinction based on grounds that are legally protected, such as gender, origin, sexual orientation, or a disability or chronic illness, and there is no valid justifying reason for doing so, then the organization is engaging in prohibited differentiation. We refer to this as discrimination.

But what about age? Both the Rotterdam-algorithm and DUO-algorithm, as studied by Algorithm Audit, differentiated based on age. However, in these cases, age discrimination does not occur.

EU non-discrimination law also prohibits discrimination on the basis of age. For instance, arbitrarily rejecting a job applicant because someone is too old is unlawful. However, legislation regarding age differentiation allows more room for a justifying argument than legislation regarding the aforementioned personal characteristics. This is especially true when the algorithm is not applied in the context of labor.

Therefore, in the case of detecting unduly granted social welfare or misuse of college loans, it is not necessarily prohibited for an algorithm to consider someone’s age. However, there must be a clear connection between age and the aim pursued. Until it is shown that someone’s age increases the likelihood of misuse or fraud, age is ineligible as a selection criterion in algorithm-driven selection procedures. By contrast, for disability allowances for youngsters (Wajong) a clear connection exists, and an algorithm can lawfully differentiate based on age.
