Bias detection tool – What is it?

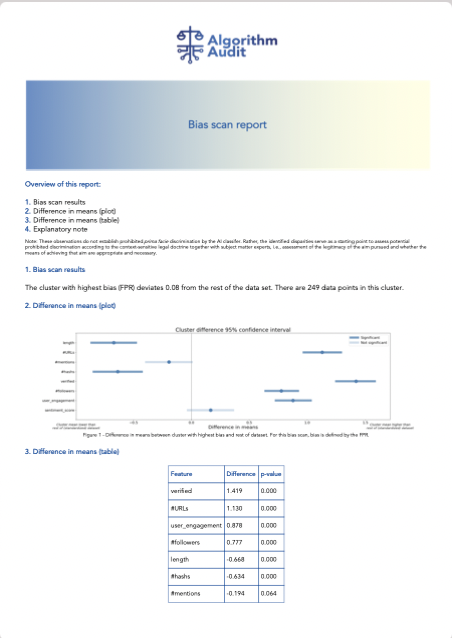

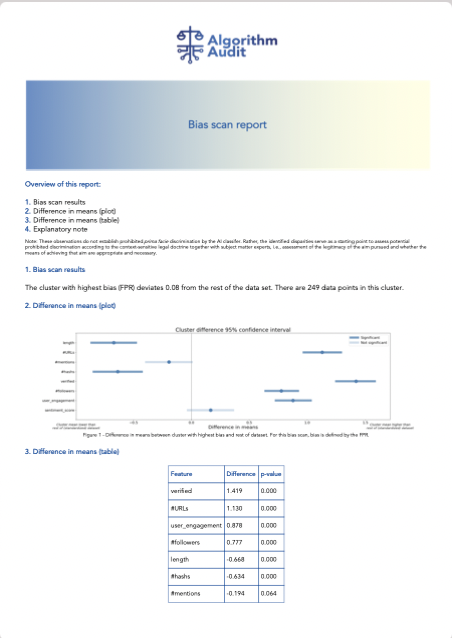

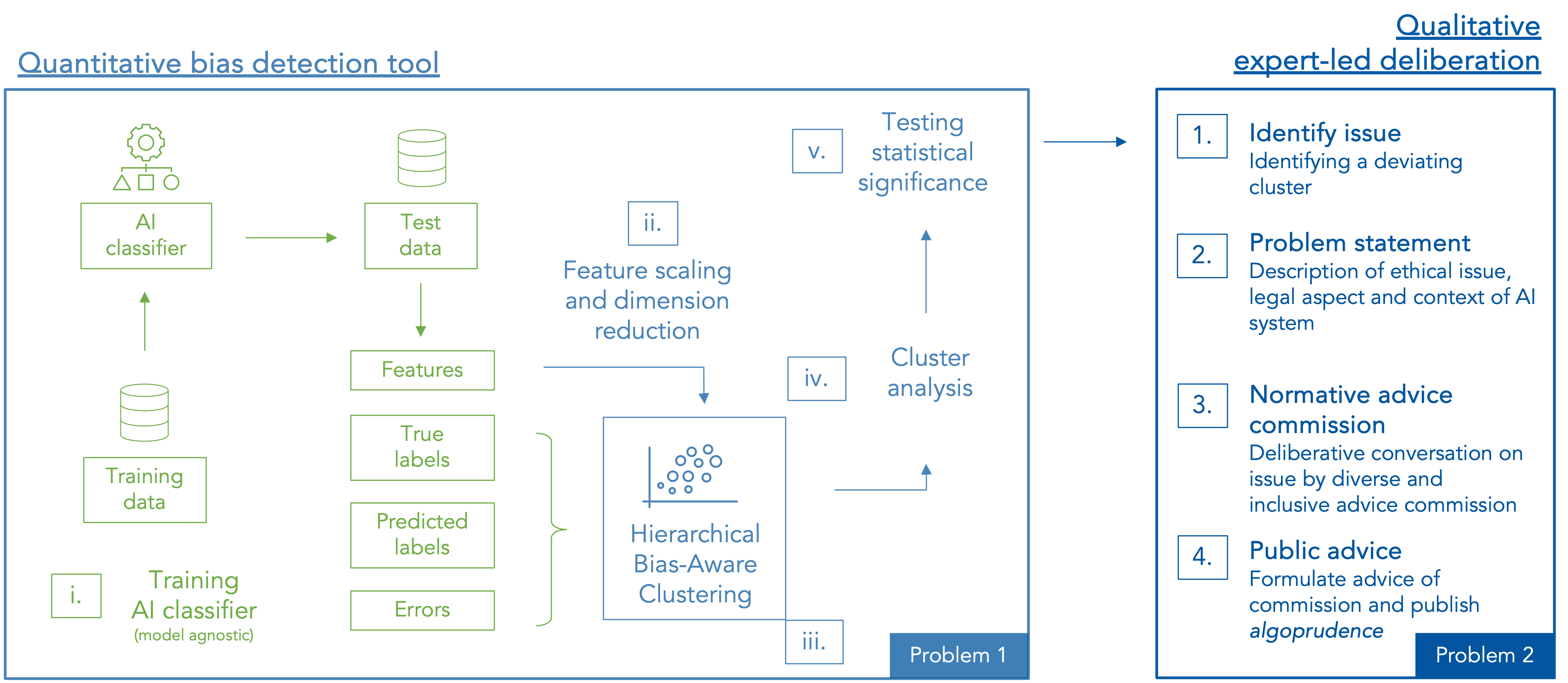

This bias detection tool identifies groups of similar users that are potentially treated unfairly by a binary algorithmic classifier. The tool identifies clusters of users that face a higher misclassification rate than the rest of the data set. Because clustering is an unsupervised ML method, no data on protected attributes of users (e.g., gender, nationality or ethnicity) is required. The metric by which bias is defined can be chosen manually in advance: False Negative Rate (FNR), False Positive Rate (FPR), or Accuracy (Acc). Because the tool uses statistical methods, it is able to detect higher-dimensional forms of apparently neutral differentiation, also referred to as higher-dimensional proxy discrimination or intersectional discrimination.
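To make "a higher misclassification rate than the rest of the data set" concrete, write D for the full data set, C for a cluster within it and M for the chosen metric, evaluated separately on C and on the remaining rows D \ C. One possible formalization, with a sign convention that is our own assumption rather than the tool's documented definition, is:

```latex
\mathrm{bias}_M(C) =
\begin{cases}
M(C) - M(D \setminus C) & \text{if } M \in \{\mathrm{FPR}, \mathrm{FNR}\},\\
M(D \setminus C) - M(C) & \text{if } M = \mathrm{Acc}.
\end{cases}
```

With this convention a positive bias always means that the cluster fares worse than the rest: a higher error rate, or a lower accuracy.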

The tool returns a report that presents the cluster with the highest bias and describes this cluster by the features that characterize it. These features are quantified by the (statistically significant) differences in feature means between the identified cluster and the rest of the data. The report also visualizes the outcomes.
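The comparison of feature means can be illustrated with a short sketch. The function name `feature_mean_differences` and its inputs (a list of feature vectors plus a boolean cluster mask) are hypothetical, and the sketch only computes and ranks the raw differences; it does not perform the significance test that the report applies on top of them.

```python
from statistics import mean


def feature_mean_differences(rows, in_cluster):
    """Return {feature_index: mean(cluster) - mean(rest)}, largest absolute gap first.

    rows: list of feature vectors (lists of numbers)
    in_cluster: list of booleans, True if the row belongs to the flagged cluster
    """
    cluster = [r for r, flag in zip(rows, in_cluster) if flag]
    rest = [r for r, flag in zip(rows, in_cluster) if not flag]
    if not cluster or not rest:
        raise ValueError("both the cluster and the rest of the data must be non-empty")
    n_features = len(rows[0])
    diffs = {
        j: mean(r[j] for r in cluster) - mean(r[j] for r in rest)
        for j in range(n_features)
    }
    # Features with the largest absolute mean gap characterize the cluster most strongly
    return dict(sorted(diffs.items(), key=lambda kv: abs(kv[1]), reverse=True))


# Usage: the first two rows form the flagged cluster
result = feature_mean_differences([[10, 1], [20, 2], [30, 3], [40, 4]], [True, True, False, False])
```

Because the input features are unscaled, the differences are expressed in each feature's original units, which keeps them interpretable for the human reader of the report.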


Example output of the bias detection tool

Normative judgement commission

An advice commission believes there is a low risk of (higher-dimensional) proxy discrimination by the BERT-based disinformation classifier.

FPR clustering results

An example report for the BERT-based disinformation detection (FPR) case study


Finalist Stanford’s AI Audit Challenge 2023

Under the name Joint Fairness Assessment Method (JFAM), our bias scan tool was selected as a finalist in Stanford's AI Audit Challenge 2023.

OECD Catalogue of Tools & Metrics for Trustworthy AI

Algorithm Audit’s bias detection tool is part of OECD’s Catalogue of Tools & Metrics for Trustworthy AI.

Hierarchical Bias-Aware Clustering (HBAC) algorithm

The bias detection tool currently works only for numeric data; in the future, it will also be able to process categorical data. Following a hierarchical search methodology, the Hierarchical Bias-Aware Clustering (HBAC) algorithm clusters the input data using the k-means clustering algorithm. The HBAC algorithm was introduced by Misztal-Radecka and Indurkhya in a scientific article published in Information Processing and Management (2021). Our implementation of the HBAC algorithm can be found on GitHub.
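The hierarchical search can be sketched in plain Python. This is a minimal illustration under our own assumptions, not Algorithm Audit's implementation on GitHub nor the exact procedure of the 2021 article: the functions `hbac`, `two_means`, `bias` and `error_rate`, the parameters `max_iter` and `min_size`, and the stopping rule (a cluster is no longer split once neither child has a higher bias than the parent) are all hypothetical. The plain misclassification rate stands in for the chosen bias metric, and a hand-written 2-means replaces a library k-means so the example has no dependencies.

```python
import random


def error_rate(idx, preds, trues):
    # Share of misclassified rows among idx; stands in for the chosen FPR/FNR/Acc metric
    return sum(preds[i] != trues[i] for i in idx) / len(idx)


def bias(cluster, n, preds, trues):
    # Error rate inside the cluster minus the error rate of all remaining rows
    members = set(cluster)
    rest = [i for i in range(n) if i not in members]
    if not rest:  # the full data set has nothing to compare against
        return 0.0
    return error_rate(cluster, preds, trues) - error_rate(rest, preds, trues)


def two_means(X, idx, iters=20, seed=0):
    # Plain k-means with k = 2 on the rows in idx; returns two lists of row indices
    rng = random.Random(seed)
    dim = len(X[idx[0]])
    centers = [X[i] for i in rng.sample(idx, 2)]
    groups = ([], [])
    for _ in range(iters):
        groups = ([], [])
        for i in idx:
            dists = [sum((a - b) ** 2 for a, b in zip(X[i], c)) for c in centers]
            groups[dists.index(min(dists))].append(i)
        if not groups[0] or not groups[1]:
            break  # degenerate split; the caller rejects it
        centers = [[sum(X[i][d] for i in g) / len(g) for d in range(dim)] for g in groups]
    return groups


def hbac(X, preds, trues, max_iter=10, min_size=2, seed=0):
    # Hierarchical bias-aware clustering sketch: returns (cluster, bias) with the highest bias
    n = len(X)
    clusters = [list(range(n))]
    frozen = set()  # clusters whose split attempt did not raise the bias
    for step in range(max_iter):
        candidates = [c for c in clusters if len(c) >= 2 * min_size and tuple(c) not in frozen]
        if not candidates:
            break
        # Split the most biased cluster that is still open for splitting
        parent = max(candidates, key=lambda c: bias(c, n, preds, trues))
        left, right = two_means(X, parent, seed=seed + step)
        if min(len(left), len(right)) >= min_size and max(
            bias(left, n, preds, trues), bias(right, n, preds, trues)
        ) > bias(parent, n, preds, trues):
            clusters.remove(parent)
            clusters.extend([left, right])
        else:
            frozen.add(tuple(parent))
    best = max(clusters, key=lambda c: bias(c, n, preds, trues))
    return best, bias(best, n, preds, trues)


# Usage: two well-separated groups; the classifier is always wrong on the second one
X = [[0, 0], [0, 1], [1, 0], [10, 10], [10, 11], [11, 10]]
preds = [0, 1, 0, 1, 0, 1]
trues = [0, 1, 0, 0, 1, 0]
best, b = hbac(X, preds, trues)
```

Splitting only when a child is more biased than its parent keeps the search focused on the region of feature space where the classifier errs most, instead of partitioning the whole data set.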

Download an example data set to use the bias scan tool.

Input data

What input does the bias scan tool need? A CSV file of at most 5 GB with feature columns (features), the labels predicted by the classifier (pred_label) and the ground-truth labels (true_label). Only the order of the columns matters: first the features, then pred_label, then true_label. All column values should be numeric and unscaled, i.e., not normalized or standardized. In sum:

- features: unscaled numeric values, e.g., feature_1, feature_2, …, feature_n;

- pred_label: 0 or 1;

- true_label: 0 or 1;

- Bias metric: False Positive Rate (FPR), False Negative Rate (FNR) or Accuracy.

Data snippet:

| feat_1 | feat_2 | ... | feat_n | pred_label | true_label |
|---|---|---|---|---|---|
| 10 | 1 | ... | 0.1 | 1 | 1 |
| 20 | 2 | ... | 0.2 | 1 | 0 |
| 30 | 3 | ... | 0.3 | 0 | 0 |
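Because only the column order matters, an input file can be split by position. The sketch below uses Python's standard `csv` module; the function name `read_bias_scan_csv` is hypothetical, and the assumptions that the file has a header row and that labels are written exactly as `0` or `1` are ours. It cannot check whether the values are unscaled; that remains the user's responsibility.

```python
import csv
import io


def read_bias_scan_csv(text):
    """Split CSV text into features, pred_label and true_label by column position.

    Assumes a header row; column names are ignored, only the order matters:
    feature columns first, then pred_label, then true_label.
    """
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    features, preds, trues = [], [], []
    for line_no, row in enumerate(reader, start=2):
        if not row:
            continue  # tolerate blank lines
        *feats, pred_label, true_label = row
        pred_label, true_label = pred_label.strip(), true_label.strip()
        if not feats:
            raise ValueError(f"line {line_no}: no feature columns")
        if pred_label not in ("0", "1") or true_label not in ("0", "1"):
            raise ValueError(f"line {line_no}: labels must be 0 or 1")
        features.append([float(v) for v in feats])  # raises ValueError if not numeric
        preds.append(int(pred_label))
        trues.append(int(true_label))
    return features, preds, trues


# Usage: the data snippet above, without the elided middle columns
sample = """feat_1,feat_2,feat_n,pred_label,true_label
10,1,0.1,1,1
20,2,0.2,1,0
30,3,0.3,0,0
"""
X, y_pred, y_true = read_bias_scan_csv(sample)
```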

Overview of supported bias metrics:

| Metric | Description |
|---|---|
| False Positive Rate (FPR) | The bias detection tool finds the cluster with the highest proportion of negative ground-truth labels that are predicted to be positive, relative to all negative ground-truth labels (False Positive Rate). For instance, the algorithm predicts a financial transaction to be risky, while after manual inspection it turns out not to be risky. |
| False Negative Rate (FNR) | The bias detection tool finds the cluster with the highest proportion of positive ground-truth labels that are predicted to be negative, relative to all positive ground-truth labels (False Negative Rate). For instance, the algorithm predicts a financial transaction not to be risky, while after manual inspection it turns out to be risky. |
| Accuracy | The bias detection tool finds the cluster with the lowest accuracy: the sum of True Positives (TPs) and True Negatives (TNs), divided by the total number of predictions. |
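All three metrics follow from the four confusion-matrix counts. The sketch below assumes that label 1 is the positive class (e.g., "risky"); the function names are illustrative, and returning `nan` when a rate's denominator is zero is our own choice.

```python
def confusion_counts(preds, trues):
    # Count TP, FP, TN and FN, treating label 1 as the positive class
    tp = sum(p == 1 and t == 1 for p, t in zip(preds, trues))
    fp = sum(p == 1 and t == 0 for p, t in zip(preds, trues))
    tn = sum(p == 0 and t == 0 for p, t in zip(preds, trues))
    fn = sum(p == 0 and t == 1 for p, t in zip(preds, trues))
    return tp, fp, tn, fn


def fpr(preds, trues):
    # False positives among all true negatives
    tp, fp, tn, fn = confusion_counts(preds, trues)
    return fp / (fp + tn) if fp + tn else float("nan")


def fnr(preds, trues):
    # False negatives among all true positives
    tp, fp, tn, fn = confusion_counts(preds, trues)
    return fn / (fn + tp) if fn + tp else float("nan")


def accuracy(preds, trues):
    # Correct predictions (TP + TN) among all predictions
    tp, fp, tn, fn = confusion_counts(preds, trues)
    return (tp + tn) / len(preds)


# Usage: pred_label and true_label from the data snippet
preds, trues = [1, 1, 0], [1, 0, 0]
print(fpr(preds, trues), fnr(preds, trues))  # 0.5 0.0
```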

FAQ

Why this bias detection tool?

- No data needed on protected attributes of users (unsupervised bias detection);

- Model-agnostic (binary AI classifiers only);

- Informs human experts which characteristics of AI system behavior should be scrutinized manually;

- Connecting quantitative, statistical tools with the qualitative doctrine of law and ethics to assess fair AI;

- Developed open-source and not-for-profit.

By whom can the bias detection tool be used?

The bias detection tool allows the entire ecosystem involved in auditing AI, e.g., data scientists, journalists, policy makers, public and private auditors, to use quantitative methods to detect bias in AI systems.

What does the tool compute?

A statistical method is used to compute which clusters are relatively often misclassified by an AI system. A cluster is a group of data points sharing similar features; these are the features on which the AI system was trained. The tool identifies and visualizes the found clusters automatically. It also assesses how individuals in a deviating cluster differ (in terms of the provided features) from others outside the cluster. Whether these differences are statistically significant is tested directly with Welch's two-sample t-test for unequal variances. All results can be downloaded directly as a PDF file.
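Welch's test compares the mean of one feature inside the cluster with its mean outside, without assuming equal variances. The sketch below computes the t statistic and the Welch-Satterthwaite degrees of freedom with the standard library only; the function name `welch_t` is hypothetical. The p-value then follows from Student's t distribution with `df` degrees of freedom, which is what `scipy.stats.ttest_ind(a, b, equal_var=False)` computes in a single call.

```python
import math
from statistics import mean, variance


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom.

    a, b: values of one feature inside and outside the cluster (at least 2 each).
    """
    # Squared standard errors of the two means, using sample variances (n - 1)
    va, vb = variance(a) / len(a), variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Usage: a feature in the cluster (a) versus the rest of the data (b)
t, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10])
```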

Does the tool detect prohibited discrimination in AI?

No. The bias detection tool serves as a starting point for assessing potentially unfair AI classifiers with the help of subject-matter expertise. The features of the identified clusters are examined for critical links with protected grounds, and for whether the measured disparities are legitimate. This is a qualitative assessment for which context-sensitive legal doctrine provides guidelines, i.e., to assess the legitimacy of the aim pursued and whether the means of achieving that aim are appropriate and necessary. In an Algorithm Audit case study, in which the bias detection tool was tested on a BERT-based disinformation classifier, a normative advice commission argued that the measured quantitative deviations could be legitimised. Legitimising unequal treatment is a context-sensitive task for which legal frameworks exist, such as assessments of proportionality, necessity and suitability. This qualitative judgement will always be a human task.

For what type of AI does the tool work?

Currently, only binary classification algorithms can be reviewed. For instance, prediction of loan approval (yes/no), disinformation detection (true/false) or disease detection (positive/negative).

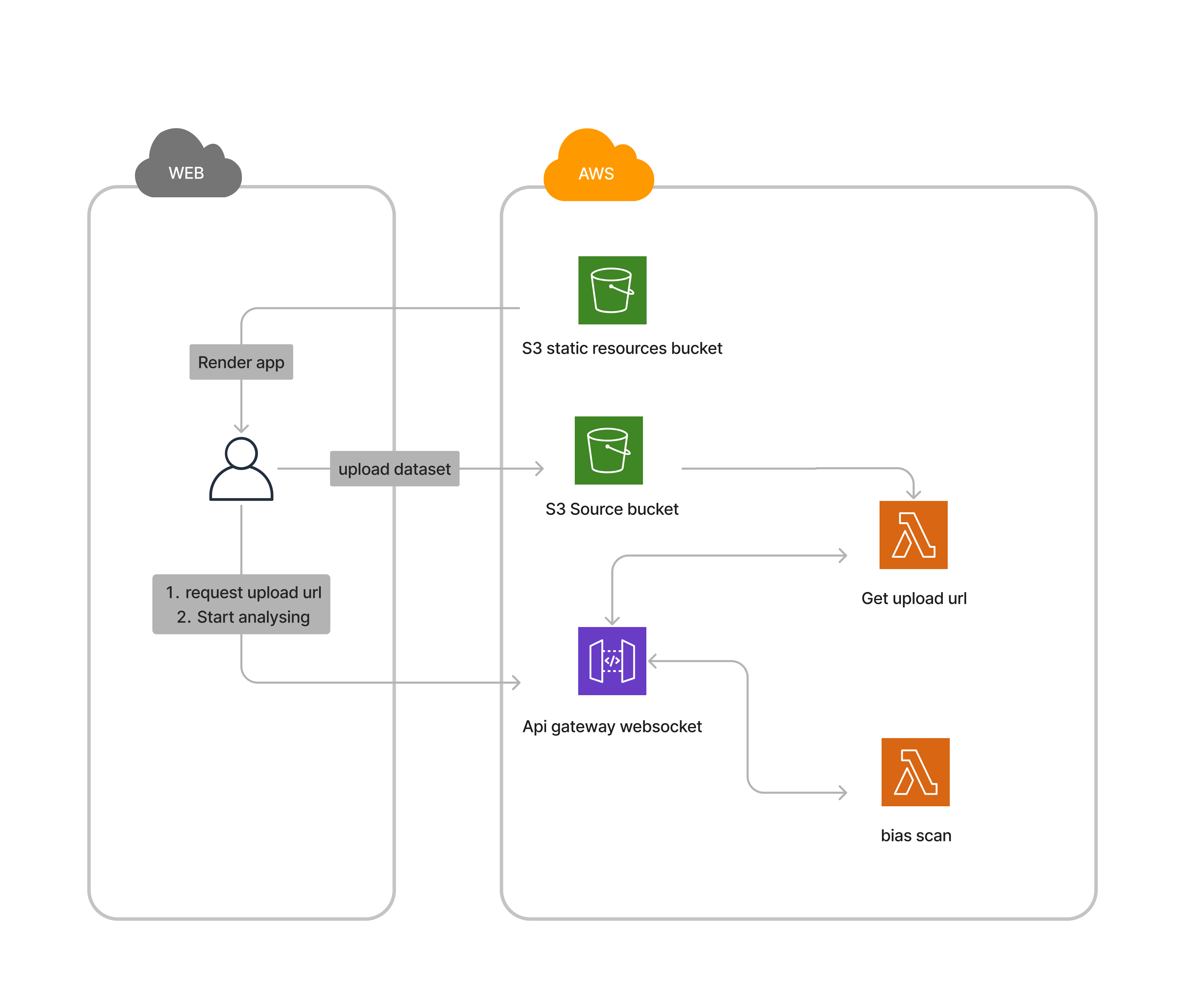

What happens with my data?

Your CSV file is uploaded to an Amazon Web Services (AWS) bucket, where it is processed by Python code. Once the HBAC algorithm has identified clusters, the results are sent back to the browser and the data is immediately deleted. Usually, your data is stored in the cloud environment for only 5-10 seconds. The web application is built according to the accompanying architecture diagram.
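The "process, then delete immediately" behaviour can be illustrated locally with Python's `tempfile` module. This is only a sketch of the pattern, not the tool's AWS code: the file here lives on local disk rather than in an AWS bucket, and `process_upload`, the `analyse` callback and `count_rows` are hypothetical names.

```python
import os
import tempfile


def process_upload(data, analyse):
    # Write the uploaded bytes to a temporary file, run the analysis, always delete the file
    fd, path = tempfile.mkstemp(suffix=".csv")
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        return analyse(path)
    finally:
        os.remove(path)  # runs even when analyse() raises


# Usage: a hypothetical analysis step that only counts the data rows
seen = []


def count_rows(path):
    seen.append(path)
    with open(path) as f:
        return sum(1 for _ in f) - 1  # minus the header row


result = process_upload(b"feat_1,pred_label,true_label\n10,1,1\n", count_rows)
```

Putting the deletion in a `finally` clause means the data is removed even when the analysis fails, so a crash never leaves the upload behind.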

In sum

Quantitative methods, such as unsupervised bias detection, help to discover potentially unfairly treated groups of similar users in AI systems in a scalable manner. Automated identification of cluster disparities in AI models allows human experts to assess the observed disparities qualitatively, in light of their political, social and environmental context. This two-pronged approach bridges the gap between the qualitative requirements of law and ethics and the quantitative nature of AI (see figure). As normative advice on identified ethical issues is made publicly available, a repository of case reviews emerges over time. We call such case-based normative advice for ethical algorithms algoprudence. Data scientists and public authorities can learn from our algoprudence and criticise it, as ultimately normative decisions regarding fair AI should be made under democratic scrutiny.

Read more about algoprudence and how Algorithm Audit builds it.

Bias Detection Tool Team

Floris Holstege

PhD-candidate Machine Learning, University of Amsterdam

Joel Persson PhD

Research Scientist, Spotify

Kirtan Padh

PhD-candidate Causal Inference and Machine Learning, TU München

Krsto Proroković

PhD-candidate, Swiss AI Lab IDSIA

Mackenzie Jorgensen

PhD-candidate Computer Science, King’s College London